How to Store Python App Logs in DBFS and Volumes in Databricks

Detailed Steps to Store Python Application Logs in DBFS and Volumes in Databricks Using FileHandler

When developing Python applications or frameworks in Databricks, it’s often useful to store logs in DBFS or in Volumes (available in Unity Catalog-enabled workspaces) for traceability and later analysis. This post shows how to write logs from custom Python applications or frameworks to DBFS or Volumes.

Although this post focuses on Databricks applications and frameworks, the same approach works for Python applications running on Windows, macOS, or Linux that write logs to the local file system.
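Because Databricks exposes DBFS through the /dbfs/ mount and Unity Catalog Volumes under /Volumes/<catalog>/<schema>/<volume>/, Python’s file APIs can treat both as ordinary paths on compute where these mounts are available, so switching targets mostly means changing the base directory. The following is a minimal sketch of that idea: the helper build_log_file_path and the catalog, schema, and volume names (main, default, app_logs) are hypothetical placeholders, and the file naming mirrors the timestamped scheme used later in this post.

```python
from datetime import datetime
from pathlib import PurePosixPath

# Example base directories. The Volumes path follows the
# /Volumes/<catalog>/<schema>/<volume>/ layout; "main", "default", and
# "app_logs" are hypothetical placeholders for your own catalog, schema, and volume.
LOG_DIRS = {
    "dbfs": "/dbfs/FileStore/logs/Test",
    "volume": "/Volumes/main/default/app_logs/Test",
    "local": "/tmp/logs/Test",  # a Linux/macOS local path
}


def build_log_file_path(base_dir: str, app_name: str, now: datetime) -> str:
    """Return <base_dir>/<app_name>_<YYYYMMDDHHMMSS>.log (hypothetical helper)."""
    current_timestamp = now.strftime("%Y%m%d%H%M%S")
    return str(PurePosixPath(base_dir) / f"{app_name}_{current_timestamp}.log")


run_time = datetime(2024, 10, 11, 11, 28, 28)
for target, base_dir in LOG_DIRS.items():
    print(target, build_log_file_path(base_dir, "Test_Application", run_time))
```

Running it prints one line per target, for example volume /Volumes/main/default/app_logs/Test/Test_Application_20241011112828.log. The logging code itself does not need to change; only the directory does.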

The Python logging module, part of the Python Standard Library, offers a powerful and customizable way to manage logs in Python applications. It is easy to use yet highly configurable: you control which messages are recorded (levels), how they look (formatters), and where they go (handlers). We’ll use its FileHandler class to write logs to a file.

We won’t cover the basics of Python logging in this post; instead, we’ll go straight to saving log files. For a detailed introduction to Python logging, you can refer to this blog.

File Handler

The FileHandler class in Python’s logging module writes log messages to a file, either instead of or in addition to the console.
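The “in addition to the console” case comes from propagation: a record handled by a logger’s own FileHandler is also passed up to the root logger’s handlers unless the logger’s propagate attribute is set to False. The sketch below illustrates this outside Databricks, assuming a StringIO attached to the root logger is a fair stand-in for console output (roughly what logging.basicConfig() sets up in a plain Python process); the logger name propagate_demo is hypothetical.

```python
import io
import logging
import os
import tempfile

# A StringIO stands in for the console so the demo can inspect what was "printed".
console = io.StringIO()
root_handler = logging.StreamHandler(console)
logging.getLogger().addHandler(root_handler)  # console-style handler on the root logger

log_file = os.path.join(tempfile.mkdtemp(), "demo.log")
logger = logging.getLogger("propagate_demo")
logger.setLevel(logging.INFO)
file_handler = logging.FileHandler(log_file)
logger.addHandler(file_handler)

logger.info("file and console")  # written by file_handler, then propagated to the root
logger.propagate = False
logger.info("file only")  # the root logger's handler no longer receives it

# Release the file and detach the stand-in console handler
file_handler.close()
logging.getLogger().removeHandler(root_handler)

with open(log_file) as f:
    print(f.read().splitlines())  # ['file and console', 'file only']
print(console.getvalue().splitlines())  # ['file and console']
```

Setting propagate to False is one way to keep log output out of notebook cell output while still writing it to the file.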

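The log file can be opened in one of two modes, set with the handler’s mode argument:

- Append mode (mode="a", the default): new log messages are added to the end of the existing file.

- Write mode (mode="w"): the file is truncated when the handler opens it, so each run overwrites the previous log.

Because the implementation below adds a timestamp to each log file name, every run writes to a new file in either mode.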
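To see the difference between the two modes, the following sketch simulates two program runs against each mode. It is a minimal illustration, assuming a local temporary directory in place of a DBFS or Volumes path; the helpers write_run and read_lines are hypothetical.

```python
import logging
import os
import tempfile


def write_run(path: str, mode: str, message: str) -> None:
    """Simulate one program run that logs a single message via FileHandler."""
    logger = logging.getLogger(f"mode_demo_{mode}")
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep demo records away from any console handlers
    handler = logging.FileHandler(path, mode=mode)
    handler.setFormatter(logging.Formatter("%(levelname)s:%(message)s"))
    logger.addHandler(handler)
    try:
        logger.info(message)
    finally:
        # Close and detach so the next "run" reopens the file from scratch
        logger.removeHandler(handler)
        handler.close()


def read_lines(path: str) -> list:
    with open(path) as f:
        return f.read().splitlines()


tmp_dir = tempfile.mkdtemp()
append_path = os.path.join(tmp_dir, "append.log")
write_path = os.path.join(tmp_dir, "write.log")

for run in (1, 2):
    write_run(append_path, "a", f"run {run}")
    write_run(write_path, "w", f"run {run}")

print(read_lines(append_path))  # ['INFO:run 1', 'INFO:run 2']
print(read_lines(write_path))  # ['INFO:run 2']
```

The append-mode file keeps both runs, while the write-mode file only holds the last one.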

Implementation

1. Import Modules

First, let’s import the necessary Python modules.

```python
# Import the modules
import logging
from datetime import datetime
import time
```

2. Configure Logging

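This function creates the log directory, builds a timestamped log file name, and returns a logger that writes to that file. Note that dbutils.fs takes a dbfs:/ URI, while Python file APIs such as FileHandler reach the same location through the /dbfs/ mount path.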

def configure_logging(app_name: str) -> logging.Logger:

"""

Function to configure logging for the application

@Param: app_name (str): Name of the application

@return: logger (logging.Logger): Returns the created logger

"""

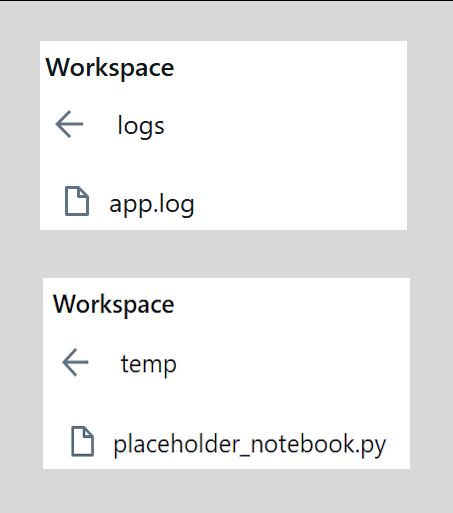

# Create directory to save log files

dbutils.fs.mkdirs("dbfs:/FileStore/logs/Test/")

# Define the name of the log file

current_timestamp = datetime.now().strftime("%Y%m%d%H%M%S")

log_file = f"/dbfs/FileStore/logs/Test/{app_name}_{current_timestamp}.log"

# Configure logging

logging_format = '%(name)s:%(asctime)s:%(levelname)s:%(message)s'

logging.basicConfig(format=logging_format)

logger=logging.getLogger(app_name)

logger.setLevel(logging.INFO)

# Create the file handler to save the log file

log_file_handler = logging.FileHandler(log_file)

# Create a formatter and add it to the file handler

formatter = logging.Formatter(logging_format, datefmt="%Y-%m-%d %H:%M:%S")

log_file_handler.setFormatter(formatter)

# Add file handler to the logger

logger.addHandler(log_file_handler)

return logger3. Shutdown Logging

At the end of the application, we will use this function to remove handlers and shut down the logging system.

```python
def shutdown_logging(logger: logging.Logger):
    """
    Function to flush, close, and remove log handlers and shut down logging

    @return: None
    """
    # Iterate over a copy, because removeHandler() modifies logger.handlers
    for handler in logger.handlers[:]:
        # Flush buffered records, then release the open log file
        handler.flush()
        handler.close()
        logger.removeHandler(handler)

    # Shutdown logging
    logging.shutdown()
```

4. Custom Application

Below is the sample application for demonstration purposes.

```python
def dummy_custom_application(logger: logging.Logger):
    # Test log messages
    logger.debug('This is a debug message')
    logger.info('This is an info message')
    logger.warning('This is a warning message')
    logger.error('This is an error message')
    logger.critical('This is a critical message')

    # Dummy application logic
    for i in range(1, 6):
        logger.info(f"This is a custom message {i}")
        time.sleep(10)
```

5. Run Application

Now, let’s run the application.

```python
# Configure logging
logger = configure_logging("Test_Application")

try:
    # Run the custom application
    dummy_custom_application(logger)
except Exception as e:
    # Handle any exceptions
    logger.exception(f"Error occurred in the application execution. Error message: {e}")
finally:
    # Shutdown logging
    shutdown_logging(logger)
```

6. Log Messages

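Here are the log messages from the log file generated during the application’s execution. The debug message does not appear because the logger level is set to INFO, and the custom messages are 10 seconds apart because of time.sleep(10).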

```text
Test_Application:2024-10-11 11:28:28:INFO:This is an info message
Test_Application:2024-10-11 11:28:28:WARNING:This is a warning message
Test_Application:2024-10-11 11:28:28:ERROR:This is an error message
Test_Application:2024-10-11 11:28:28:CRITICAL:This is a critical message
Test_Application:2024-10-11 11:28:28:INFO:This is a custom message 1
Test_Application:2024-10-11 11:28:38:INFO:This is a custom message 2
Test_Application:2024-10-11 11:28:48:INFO:This is a custom message 3
Test_Application:2024-10-11 11:28:58:INFO:This is a custom message 4
Test_Application:2024-10-11 11:29:08:INFO:This is a custom message 5
```

Complete Solution

```python
# Import the modules
import logging
from datetime import datetime
import time


def configure_logging(app_name: str) -> logging.Logger:
    """
    Function to configure logging for the application

    @Param: app_name (str): Name of the application
    @return: logger (logging.Logger): Returns the created logger
    """
    # Create directory to save log files
    # (dbutils is available by default in Databricks notebooks)
    dbutils.fs.mkdirs("dbfs:/FileStore/logs/Test/")

    # Define the name of the log file (Python file APIs use the /dbfs/ mount path)
    current_timestamp = datetime.now().strftime("%Y%m%d%H%M%S")
    log_file = f"/dbfs/FileStore/logs/Test/{app_name}_{current_timestamp}.log"

    # Configure logging
    logging_format = '%(name)s:%(asctime)s:%(levelname)s:%(message)s'
    logging.basicConfig(format=logging_format)
    logger = logging.getLogger(app_name)
    logger.setLevel(logging.INFO)

    # Create the file handler to save the log file
    log_file_handler = logging.FileHandler(log_file)

    # Create a formatter and add it to the file handler
    formatter = logging.Formatter(logging_format, datefmt="%Y-%m-%d %H:%M:%S")
    log_file_handler.setFormatter(formatter)

    # Add file handler to the logger
    logger.addHandler(log_file_handler)

    return logger


def shutdown_logging(logger: logging.Logger):
    """
    Function to flush, close, and remove log handlers and shut down logging

    @return: None
    """
    # Iterate over a copy, because removeHandler() modifies logger.handlers
    for handler in logger.handlers[:]:
        # Flush buffered records, then release the open log file
        handler.flush()
        handler.close()
        logger.removeHandler(handler)

    # Shutdown logging
    logging.shutdown()


def dummy_custom_application(logger: logging.Logger):
    # Test log messages
    logger.debug('This is a debug message')
    logger.info('This is an info message')
    logger.warning('This is a warning message')
    logger.error('This is an error message')
    logger.critical('This is a critical message')

    # Dummy application logic
    for i in range(1, 6):
        logger.info(f"This is a custom message {i}")
        time.sleep(10)


# Configure logging
logger = configure_logging("Test_Application")

try:
    # Run the custom application
    dummy_custom_application(logger)
except Exception as e:
    # Handle any exceptions
    logger.exception(f"Error occurred in the application execution. Error message: {e}")
finally:
    # Shutdown logging
    shutdown_logging(logger)
```

Conclusion

Effectively managing log files is essential for monitoring and analyzing the behavior of Python applications. In this post, we stored logs from a custom Python application in DBFS using the Python logging module and its FileHandler; the same approach applies to Volumes by pointing the handler at a /Volumes/ path. Each run produces its own timestamped log file that captures important events and errors, which makes debugging easier and supports application maintenance and performance monitoring.
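One possible refinement, not part of the walkthrough above, is to pair setup and cleanup in a context manager so the file handler is always flushed and closed, even when the application raises. The sketch below assumes the log directory already exists (FileHandler does not create directories); the helper name file_logging is hypothetical, and it reuses the log format from configure_logging.

```python
import logging
from contextlib import contextmanager
from typing import Iterator


@contextmanager
def file_logging(app_name: str, log_file: str) -> Iterator[logging.Logger]:
    """Hypothetical helper: attach a FileHandler for the duration of a with-block."""
    logger = logging.getLogger(app_name)
    logger.setLevel(logging.INFO)
    handler = logging.FileHandler(log_file)
    handler.setFormatter(logging.Formatter(
        "%(name)s:%(asctime)s:%(levelname)s:%(message)s",
        datefmt="%Y-%m-%d %H:%M:%S",
    ))
    logger.addHandler(handler)
    try:
        yield logger
    except Exception:
        # Record the traceback in the log file, then let the error propagate
        logger.exception("Error occurred in the application execution")
        raise
    finally:
        # Detach and close the handler even if the with-block raised
        logger.removeHandler(handler)
        handler.close()
```

The run step would then read with file_logging("Test_Application", log_file) as logger: followed by dummy_custom_application(logger), where log_file is built as in configure_logging. Unlike the original try/except, this version re-raises the exception after logging it, so the failure stays visible to the caller (for example, a job run); remove the raise to match the original behavior.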

Thank you for reading! If you enjoyed this post and want to show your support, here’s how you can help:


- Follow the Data Engineer Things publication for more stories like this one.